My Hive Engine Tokens Snapshot Tool: Now It Becomes Really Useful

In my previous public update for this tool, the most important addition was support for diesel pools.

I guess the tool started to shape up quite well with today's commits. By the way, I'm anxious to see VSC starting to get used, so I can build something for it too.

In the meantime, this is what's new for today's update:

- added persistent caching with a 15-minute expiration time for CoinGecko prices, market and diesel pool data, to avoid hitting the APIs too often (for example, when the script is run for several accounts)

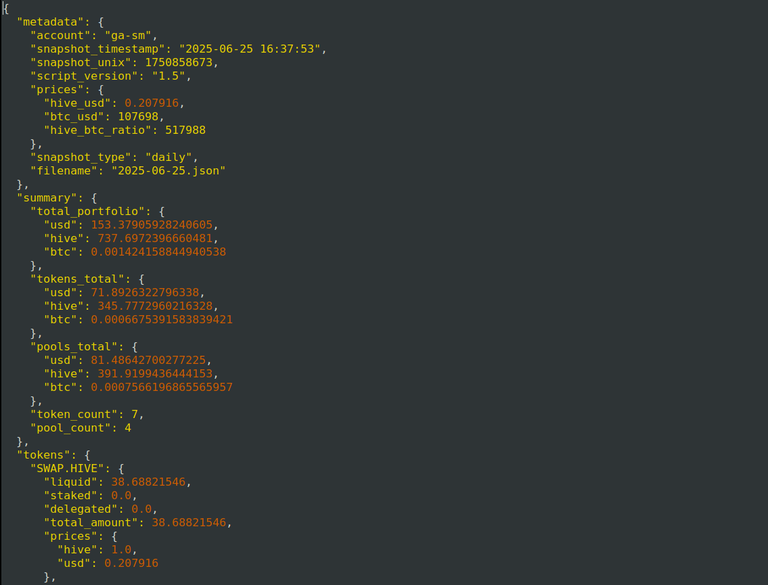

- added automatic saving of snapshots as JSON files after processing. Here's a nice image:

- created `setup.sh`, which:
  - takes two variables for the project directory and the snapshots base directory (which must be updated to work!)
  - takes a list of usernames (which, again, needs to be updated for your needs)
  - installs Python dependencies
  - creates and activates a service and a timer to run our tool for the list of accounts at 8am or later, when the computer is turned on and has access to the internet (FIXED: I just realized that this shell script runs all accounts with the same set of tokens defined in `config.py`; I suggest you use `multiaccount-manual-run.sh` for snapshots of a different set of tokens for each account, until I fix this)
  - tests this setup

- created `multiaccount-manual-run.sh`, where someone can add all their accounts and associated lists of tokens, and the script will run for each account (and create a snapshot JSON file for each account); persistent caching helps avoid requesting the same data from the APIs multiple times. I added this shell script thinking about people who don't want the snapshots taken automatically (maybe because they don't want to add a service and a timer on their system, or because they only want to run it occasionally)

- refactored the code as it grew and I could improve its structure
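To illustrate the persistent caching idea from the list above, here is a minimal sketch of a file-backed cache with a 15-minute expiration. It is not the tool's actual code: the `get_cached` function, the single JSON cache file, and the `fetch` callback are all assumptions made for the example.

```python
import json
import time
from pathlib import Path

CACHE_TTL_SECONDS = 15 * 60  # 15-minute expiration, as described in the post


def get_cached(cache_file, key, fetch, ttl=CACHE_TTL_SECONDS, now=None):
    """Return the cached value for `key`; call `fetch()` only if it is missing or expired.

    Hypothetical helper: the cache layout (one JSON file, one entry per key)
    is an assumption, not the tool's real format.
    """
    now = time.time() if now is None else now
    path = Path(cache_file)
    try:
        cache = json.loads(path.read_text())
    except (FileNotFoundError, json.JSONDecodeError):
        cache = {}  # no usable cache on disk yet

    entry = cache.get(key)
    if entry is not None and now - entry["saved_at"] < ttl:
        return entry["value"]  # still fresh: no API call needed

    value = fetch()  # expired or missing: ask the API again
    cache[key] = {"saved_at": now, "value": value}
    path.write_text(json.dumps(cache))
    return value
```

Because the cache lives on disk, a second run of the script for another account within 15 minutes can reuse the same price and pool data instead of calling the APIs again.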

The structure of snapshots is as follows. Under the SNAPSHOTS_DIR (defined in config.py or taken as an argument on the command line), we have:

[SNAPSHOTS_DIR]/[USERNAME]/[SNAPSHOT_TYPE]/timestamped-filename.json

Something like this:

The exact filename depends on the snapshot type. For example, for a weekly snapshot, it would be something like 2025-W26.json.
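As a rough sketch of how such a path could be assembled, the snippet below builds the directory layout described above and a weekly filename. The function names are hypothetical, and using the ISO week number is an assumption; the tool may number weeks differently.

```python
from datetime import date
from pathlib import Path


def weekly_filename(day):
    """Build a weekly snapshot filename such as 2025-W26.json (ISO week assumed)."""
    iso_year, iso_week, _ = day.isocalendar()
    return f"{iso_year}-W{iso_week:02d}.json"


def snapshot_path(snapshots_dir, username, snapshot_type, filename):
    """Join the pieces as [SNAPSHOTS_DIR]/[USERNAME]/[SNAPSHOT_TYPE]/filename."""
    return Path(snapshots_dir) / username / snapshot_type / filename


# Example with a made-up base directory and username:
path = snapshot_path("snapshots", "alice", "weekly", weekly_filename(date(2025, 6, 28)))
print(path.as_posix())  # snapshots/alice/weekly/2025-W26.json
```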

At this point, after I fix (FIXED!) the oversight about the automated script using the same list of tokens for every account, instead of distinct ones (which can easily be circumvented for the short term by running the multiaccount-manual-run.sh), this side of the tool is pretty much feature complete.

What could be done next would be to use the snapshot JSON files to generate some reports / charts, but that's another big project by itself.

What I can say is that, despite using AIs to help with this project, I learned (or remembered what I already knew somewhere in the back of my mind) a bunch through it.

UPDATES:

- Fixed `setup.sh` to work with a list of accounts AND the associated list of tokens for each account.
  - the script also deactivates and removes the old service and timer at the beginning, if they were previously set; helpful if you change the code and re-run `setup.sh`
  - I carved out the part about deactivation and removal of the current service and timer and added it to the shell script `stop-automation.sh`, in case someone doesn't want automated snapshots taken anymore

- created an `uninstall.sh` script with the obvious functionality (read the updated `readme.md` on the repo for more info)

Any feedback?

How do you determine the value of second-layer tokens? I mean, it's a market maker, so does it take the value of the buy orders?

Ignore the previous message if you read it.

It's the average between buy price and sell price. If buy price is missing, it is considered 0, so in effect the price of the token is considered half the sell price. If both are missing, the price is 0.
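In code, that rule could look roughly like the sketch below. The function name is made up for illustration, and treating a missing sell price as 0 (the same way as a missing buy price) is my assumption here, since only the missing-buy and both-missing cases are spelled out above.

```python
def estimate_token_price(buy_price, sell_price):
    """Average of the buy and sell prices, with a missing side counted as 0.

    Hypothetical helper; `None` stands for "no order on that side of the book".
    """
    buy = buy_price or 0.0    # missing buy price is considered 0
    sell = sell_price or 0.0  # assumption: missing sell price is also considered 0
    return (buy + sell) / 2


print(estimate_token_price(None, 0.2))   # 0.1 (half the sell price)
print(estimate_token_price(None, None))  # 0.0
```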

Could you make it a dynamic calculation? For tokens with a wide spread or low sales volume, I guess even the average is optimistic.

I thought about it too. But volatility can go both ways on low volume. And these market movements can be very unpredictable. It's true, if you want to liquidate a high amount of low-liquidity tokens quickly, price goes down significantly, but that could be an assumption. One could have some sell orders at much higher prices, and if the price spikes, tokens are sold at a premium compared to the average being considered as the price of the token. So... it really depends on the user. If they want to discard some of their token holdings, they simply don't include the token and it won't be included in the snapshot, with any price.

I haven't used this tool before, but since you said that using it makes things easier, I will definitely try using it.

Well... I built it. How can I say otherwise? 😄

I think the tool is looking good. Are you having AI refactor the code for you? I remember that being a tedious step, but it's good because it makes the code easier to maintain.

It helps here and there. But I do more and more myself to avoid running out of free prompts for the day (for multiple models, lol).

It's quite complicated to get the AI involved in this. You have to be smart about it, because otherwise you could run out of prompts after... 1 prompt, and not even that one completed.

Developed always makes a new moment ... thanks for sharing this beautiful content with us 💕

Wow! You are into coding too. Impressive!

!PIZZA

!BBH

I used to. Nowadays... I rely mostly on AI. The coding world moved on while I took a break. But the fundamentals are there.

Great for you. Coding is almost completely foreign to me. 😆😅

!LOLZ

!PIZZA
