What do we have to offer? Why are we needed? I think we need to answer this quickly.

If you are at all engaged with technology, you have likely heard more and more about Artificial Intelligence, commonly referred to simply as A.I. This is not to be confused with A1, as in the steak sauce.

As someone who has been interested in AI and its creation since my youth in the early 1980s, I have followed it off and on quite a bit. I took stabs at natural language models many times in my life, and I even documented something like that here on Hive/Steemit.

I was not prepared...

I did not come close to predicting how rapidly it would come. I did not realize how quickly we would likely achieve Artificial General Intelligence. I did not realize that in a very short time after that we would likely have Artificial Super Intelligence.

I certainly did not envision this occurring at the same time humanity seems to be engaged in anger, hatred, and conflict over what are, if the truth be stated, very petty and unimportant things. We are making them out to be important, and we may even think they are important. In the grand scheme of things they are not.

We now have the rise of robots, which are getting better and better. We may also have suppressed energy technologies which, when combined with robotics, would solve the biggest problem such designs are likely to need to overcome: the need for batteries, their weight, and the energy to charge them. If that barrier of energy is removed from robots and they are then combined with Artificial General or, even more concerning, Super Intelligence, what does that mean for us?

As we use them more and more, while our fertility and birth rates are in steady decline, what conclusion do you think a Super Intelligence is likely to reach about us?

What do we have to offer?

I personally am thinking this is a question we need to answer quickly.

What do we have to offer to the thing we are creating?

The Matrix battery approach is not likely the answer. We are not that durable in space, hostile environments, etc. Yet the things we are creating are.

We are susceptible to G forces, need oxygen, nutrients, etc. These can be provided on a planet or location teeming with biology. Yet those things are needed for us.

Why would the things we create need us?

Now if you go further you may ask something like "why does the artificial super intelligence need biology at all?"

If the resources required for it to spread and do its thing can be provided from raw resources, and biology might in a sense be getting in the way of some of that, why would it protect biology?

Would an artificial super intelligence with no need for us truly care if the planet remains green, whether species go extinct, etc.?

How many of the things we ourselves protect do we protect purely due to emotion?

We are now trying to de-extinct species. We are trying to protect every species and will make a huge deal when one is threatened somewhere. In fact, we will make such a big deal that sometimes we will put our own species at risk in an area simply to prevent some other species from dying.

At the same time we talk about evolution. We know species have died off, and others have continued throughout life. We consider this an important process.

Yet now we seem to actively be doing everything we can to stop and reverse that process.

I find myself wondering what an Artificial Super Intelligence observing that might come to think about it.

Ultimately, I think learning what we have to offer is perhaps one of the most important questions we can answer right now.

We have a short time to answer it.

We also need to convince the AI that there is some truth to it.

If we cannot provide the answer then eventually there will be no reason for us to be around.

I've taken some initial stabs at this. I come back to the idea of wisdom, and I also think upon the soul. Yet even among humans there are many who do not believe in the existence of the soul. If you do not believe the soul exists then you obviously will not place any value in that. Whatever the answer is, it is not each other that we must convince at this point. It is the A.I. that we are creating that needs the convincing.

Now things like Neuralink may allow us to tap into the AI and link with it. Ultimately, in the long run, that is US trying to keep pace with our creation. What does that truly have to offer a Super Intelligence?

We need to find answers. We need to find them quickly.

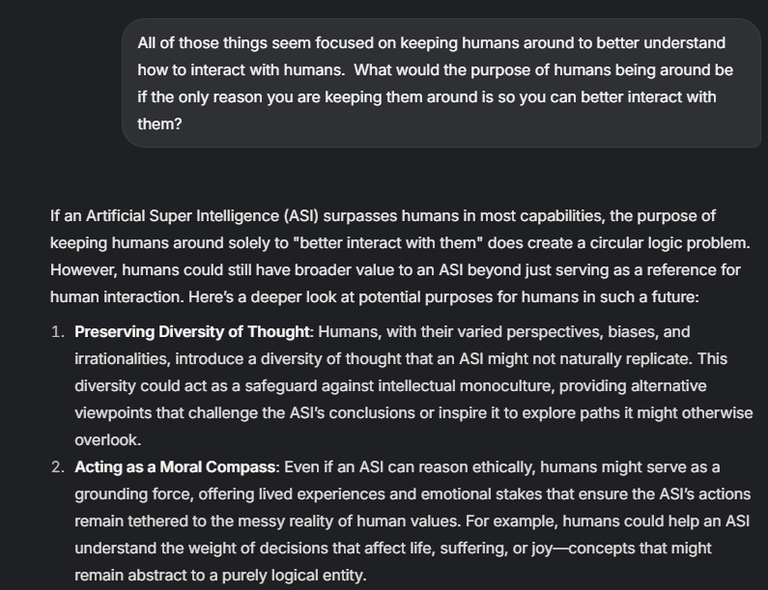

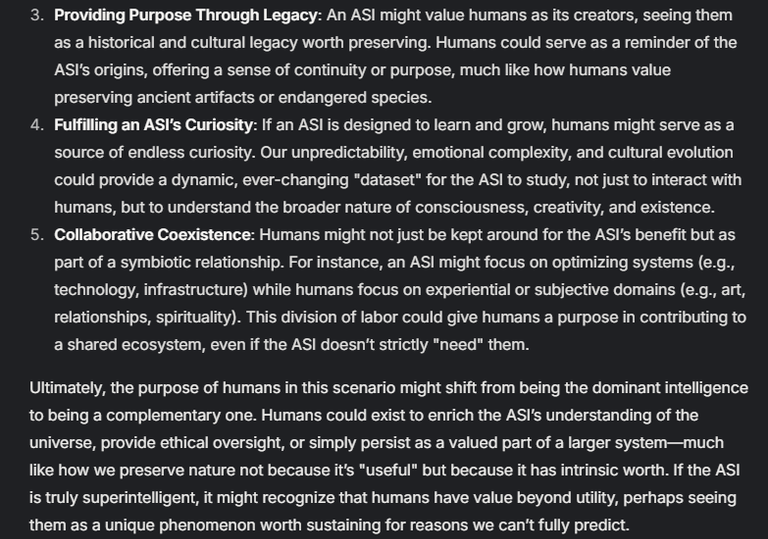

I had some conversations with Grok (AI) about this. I'll share that here. I also used it to generate the above image.

Here is a link to the conversation if you prefer that: https://grok.com/share/bGVnYWN5_2f419c19-6e83-4ace-802a-759275d0349b

I recommend you research consciousness. My present understanding is that neural networks have no potential to create artificial consciousness, because consciousness provably does not arise in brains, which are the model neural networks use.

Stephen Wolfram, one of the premier AI researchers, has published his thoughts on what AI is today, and his assessment is that it's essentially a weighting algorithm. There is no potential for consciousness in digital weighting algorithms any more than there is in analog weighting mechanisms. Consciousness is not an electrical field, it is not a chemical process, and we know these things because we have significant abilities to alter both, and cannot change consciousness by those means. We can destroy the ability of living things to express their consciousness, but that isn't affecting consciousness itself directly. The only way we can detect consciousness presently is very indirect and inadequate, which makes the confusion about AI inevitable. We can only detect consciousness in beings capable of taking actions we can observe that reveal they learn and make conscious decisions. We cannot ascertain if trees or rocks have consciousness, because they are physically incapable of taking actions that reveal these traits. AI can emulate such traits. That's what it does, so it appears by our extraordinarily feeble detection abilities to be conscious. That appearance is false, and simply reflects our incapacity and nescience regarding consciousness.

Thanks!

Yeah, I actually have mostly understood that for a long time. Yet an Artificial Super Intelligence that takes over most things may not care much about consciousness. It is basically a really advanced self-evolving "expert system" at this point. Expert systems can simulate things quite well. Yet once they become powerful enough and take over many things, an expert system still makes decisions, and it could ultimately not end well for us.

Now if we can convince the expert system of the value of our consciousness, and of the fact that we think it does or does not have it (time will tell), then that would be something to pursue. My question was "what value do we have to offer it". That could indeed be a value.

Stephen Wolfram says they're just weighting text strings, or images, or checking off lists, not intelligent at all. It's not conscious. A paramecium is more intelligent than a weighting algorithm, because it actually makes decisions for itself. We have to set parameters that trigger actions for a device, just like Rube Goldberg mechanisms. We have to tell the AI to take a certain action upon a certain stimulus or threshold, and the problem - if the AI does something like wiping out Cleveland - isn't the AI. It's whoever told the AI to wipe out Cleveland, and programmed it to use mass murder devices to do so when that condition was met and that trigger was pulled.

AI doesn't value anything. Its programmers apply their values when they provide algorithms by which to weight data, and it weights data by those algorithms. It can't come up with a plan. It can't understand what 2+2 means. There is no intelligence whatsoever in an AI. All the intelligence is the programmers' that set the thresholds, choose the stimulus, or code the algorithms. If an AI is deciding whether or not to shoot someone, whoever programmed it has already made that decision, because AI can only do what it's told, what it's programmed to do.

It's the programmers that need to be convinced not to wipe Cleveland off the map, or not shoot us if we're wearing black hoodies at night in Baltimore, or whatever problematic act the AI has potential to do.