My Node Fell Behind... Because I Didn't Follow My Own Advice! (Fixing HE Config with a Script)

Well, had one of those slightly embarrassing facepalm moments recently. I noticed my Hive-Engine node was seriously lagging – checking the status, it was something like 13 hours behind the main Hive chain. Definitely not ideal for keeping up with sidechain operations! After scratching my head for a bit, the likely reason dawned on me...

For the past few weeks, I've been building libraries (nectarflower-js/go/rust, hive-nectar) and writing posts all about the benefits of using the on-chain node lists maintained by @nectarflower in their account metadata. It's a great way to get a list of reliable, benchmarked Hive API nodes. And guess what I hadn't done for my own Hive-Engine node? Yep. I hadn't bothered to make sure its own configuration file (config.json for the hivesmartcontracts software) was actually using that fresh list of nodes in its streamNodes setting. My own node was likely trying to stream from stale or poorly performing Hive nodes. Time to practice what I preach!

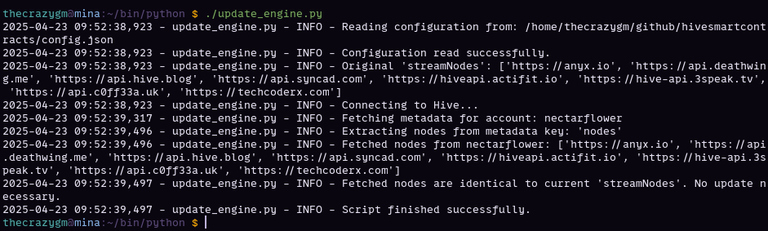

So, to fix my immediate problem and automate this going forward, I put together a Python script. The goal is simple: fetch the current list of recommended nodes from @nectarflower's metadata and automatically update the streamNodes list within my Hive-Engine node's config.json file.

It's a fairly straightforward script, built using my hive-nectar library (eating my own dog food). Here's the high-level process:

- Read Existing Config: It opens the

config.jsonfile (I have it hardcoded to/home/thecrazygm/github/hivesmartcontracts/config.jsonfor my setup) and loads the current settings. - Fetch Fresh Nodes: It uses

hive-nectarto connect to Hive and pull thejson_metadatafrom the@nectarfloweraccount. - Extract Node List: It specifically looks for the

nodeskey within that metadata and grabs the list of node URLs. - Compare & Update: It compares this newly fetched list against the list currently assigned to the

streamNodeskey in the loaded config. - Write Back (If Changed): If the lists are different, it updates the

streamNodesvalue in the Python dictionary representing the config and writes the entire updated configuration back to theconfig.jsonfile, nicely formatted.

It also includes logging so I can see what it's doing and some error handling just in case the file is missing, the JSON is bad, or the metadata doesn't contain the expected key.

For those curious about the implementation, here's the script:

#!/usr/bin/env -S uv run --quiet --script

# /// script

# requires-python = ">=3.13"

# dependencies = [

# "hive-nectar",

# ]

# ///

import json

import logging

import os

import sys

from nectar.account import Account

from nectar.hive import Hive

# --- Configuration ---

# Path to config to update

# Ensure this path is correct and the script has write permissions

CONFIG_PATH = "/home/thecrazygm/github/hivesmartcontracts/config.json"

ACCOUNT_NAME = "nectarflower"

CONFIG_KEY_TO_UPDATE = "streamNodes" # The key in config.json to update

METADATA_KEY_FOR_NODES = "nodes" # The key in account metadata containing the nodes

# --- Logging Setup ---

logging.basicConfig(

level=logging.INFO,

format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",

handlers=[logging.StreamHandler(sys.stdout)],

)

logger = logging.getLogger(os.path.basename(__file__))

# --- Main Logic ---

try:

# 1. Read existing config

logger.info(f"Reading configuration from: {CONFIG_PATH}")

try:

with open(CONFIG_PATH, "r") as f:

config = json.load(f)

logger.info("Configuration read successfully.")

original_nodes = config.get(CONFIG_KEY_TO_UPDATE, []) # Use .get for safety

logger.debug(f"Original '{CONFIG_KEY_TO_UPDATE}': {original_nodes}") # Log original only if needed

except FileNotFoundError:

logger.error(f"Configuration file not found: {CONFIG_PATH}")

sys.exit(1)

except json.JSONDecodeError as e:

logger.error(f"Error decoding JSON from {CONFIG_PATH}: {e}")

sys.exit(1)

# 2. Connect to Hive and get account data

logger.info("Connecting to Hive...")

hv = Hive()

logger.info(f"Fetching metadata for account: {ACCOUNT_NAME}")

acc = Account(ACCOUNT_NAME, blockchain_instance=hv)

account_metadata = acc.json_metadata

if not account_metadata:

logger.error(f"Failed to retrieve metadata or metadata is empty for {ACCOUNT_NAME}.")

sys.exit(1) # Exit if no metadata

# 3. Extract nodes from metadata

logger.info(f"Extracting nodes from metadata key: '{METADATA_KEY_FOR_NODES}'")

if METADATA_KEY_FOR_NODES not in account_metadata:

logger.error(f"Metadata for {ACCOUNT_NAME} does not contain the key '{METADATA_KEY_FOR_NODES}'. Metadata: {account_metadata}")

sys.exit(1) # Exit if key is missing

new_nodes = account_metadata[METADATA_KEY_FOR_NODES]

if not isinstance(new_nodes, list): # Basic validation

logger.error(f"Expected '{METADATA_KEY_FOR_NODES}' to be a list, but got {type(new_nodes)}. Value: {new_nodes}")

sys.exit(1)

logger.info(f"Fetched nodes from {ACCOUNT_NAME}: {new_nodes}")

# 4. Update the config dictionary (only if nodes have changed)

if new_nodes == original_nodes:

logger.info(f"Fetched nodes are identical to current '{CONFIG_KEY_TO_UPDATE}'. No update necessary.")

else:

logger.info(f"Updating '{CONFIG_KEY_TO_UPDATE}' in configuration...")

config[CONFIG_KEY_TO_UPDATE] = new_nodes # <-- The update step

# 5. Write the updated config back to the file

logger.info(f"Writing updated configuration back to: {CONFIG_PATH}")

try:

with open(CONFIG_PATH, "w") as f:

json.dump(config, f, indent=4) # Use indent for pretty-printing

logger.info("Configuration file updated successfully.")

except IOError as e:

logger.error(f"Failed to write configuration file '{CONFIG_PATH}': {e}")

sys.exit(1)

except Exception as e:

# Catch any other unexpected errors (e.g., Hive connection issues)

logger.exception(f"An unexpected error occurred: {e}") # Use logger.exception to include traceback

sys.exit(1)

logger.info("Script finished.")

(Side note: Using uv run --script with the dependency metadata right in the header is pretty slick for simple, self-contained utility scripts like this!)

After running this script, it updated my config.json with the fresh node list. I restarted my Hive-Engine node, and sure enough, it connected to healthy Hive peers and chewed through that 13-hour backlog pretty quickly. Problem solved!

So, the lesson learned today is to actually use the tools and methods you advocate for, even on your own infrastructure! Maybe I'll stick this script on a cron job to run periodically... Hope this tale of woe and the script itself might be helpful for other Hive-Engine node runners out there.

As always,

Michael Garcia a.k.a. TheCrazyGM

Very cool, my friend, both for the usefulness of the libraries, and the slick automation! I really appreciate how clean and organized your code is! It's so easy to read, and I'm not even a coder yet! 😁 🙏 💚 ✨ 🤙