NVIDIA’s GPUs and the Rise of Confidential AI Computing


Confidential AI Computing: Securing User Data from AI Service Providers

Frameworks for confidential AI computing now exist. They protect users' sensitive data from both the providers of AI applications and the infrastructure that hosts those applications, whether in the cloud, at the edge, or on remote or shared infrastructure.

For example, confidential AI computing can keep the prompts a user enters from being exposed to the provider of an application such as ChatGPT, and to the infrastructure, such as Microsoft Azure, that supplies the compute, storage, and other services needed to run AI workloads.

Secure AI: Safeguarding Enterprise AI Applications from Unauthorized Access

As the AI era matures, more enterprises will integrate AI into their operational processes. It is crucial that the AI models and applications developed for enterprise use cases are secured from unauthorized access.

Both the data used to train Large Language Models (LLMs) and the algorithms that power them need protection from external tampering.

Technological Frameworks for Confidential AI Computing Already in Place

The good news is that technological frameworks supporting confidential and secure AI functions are already operational. Companies like Continuum provide platforms that enable secure and confidential AI computing, and Secret AI is also developing frameworks for secure, confidential AI computing.

In this post, we will explore one of the essential technological aspects required for confidential AI computing processes: the GPU framework designed to ensure secure, data-privacy-compliant confidential AI computing.

Confidential Computing Powered by NVIDIA's Specialized GPUs

NVIDIA has developed GPU infrastructure that supports confidential AI computing processes, specifically through NVIDIA Blackwell and NVIDIA Hopper GPUs. These GPUs allow confidential computing processes to run inside a Trusted Execution Environment (TEE), isolated from the surrounding virtual machine infrastructure.

A TEE is a secure, sandboxed environment where confidential AI computing takes place. Data being processed inside the TEE remains inaccessible to the rest of the system, including the operating system (OS), computer operators, and blockchain system validators.

NVIDIA's GPUs for Confidential AI Computing Processes

NVIDIA’s Blackwell and Hopper GPUs, together with TEEs, are essential infrastructure components that power confidential AI computing processes.

Use Cases for Confidential AI Processing

This framework enables user prompts to be encrypted and kept confidential from AI application operators and infrastructure providers. The AI applications can operate on any platform while ensuring that user data is exclusively processed within TEEs.


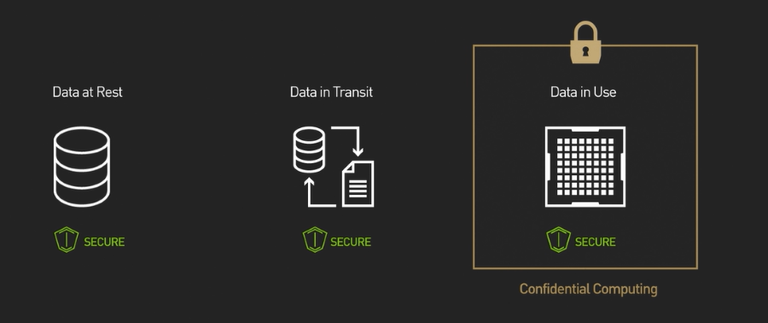

NVIDIA’s specialized GPUs can power confidential computing processes that secure data in use

Additionally, LLMs can be trained confidentially, with training data uploaded to TEEs. The confidential processing of AI workloads is facilitated by NVIDIA's Blackwell and Hopper GPUs. Inference processes will rely on secured data that is not publicly accessible, thus preventing tampering by unauthorized parties.

Hardware Authenticity Verification Embedded

NVIDIA’s confidential computing features include attestation services that verify the authenticity of compute assets with a zero-trust architecture.
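To make the idea of attestation concrete, here is a minimal sketch of a generic challenge-response check. It is not NVIDIA's attestation API. The names `produce_report`, `verify_report`, `DEVICE_KEY`, and `EXPECTED_MEASUREMENT` are hypothetical, and a shared-key HMAC stands in for the hardware-backed signature that a real device would produce with an asymmetric key and a vendor certificate chain.

```python
import hashlib
import hmac
import secrets

# Hypothetical stand-in for a device secret. Real hardware signs its report
# with an asymmetric key whose certificate chains back to the vendor.
DEVICE_KEY = secrets.token_bytes(32)

# Hypothetical "known good" measurement that the verifier expects to see.
EXPECTED_MEASUREMENT = hashlib.sha256(b"trusted-firmware-v1").hexdigest()


def produce_report(nonce: bytes, firmware: bytes, key: bytes) -> dict:
    """Device side: measure the firmware and sign (nonce + measurement)."""
    measurement = hashlib.sha256(firmware).hexdigest()
    payload = nonce + measurement.encode()
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"nonce": nonce, "measurement": measurement, "signature": signature}


def verify_report(report: dict, nonce: bytes, key: bytes, expected: str) -> bool:
    """Verifier side: trust nothing until signature, nonce, and measurement match."""
    payload = report["nonce"] + report["measurement"].encode()
    expected_sig = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return (
        hmac.compare_digest(report["signature"], expected_sig)  # report is authentic
        and hmac.compare_digest(report["nonce"], nonce)  # report is fresh
        and hmac.compare_digest(report["measurement"], expected)  # known-good state
    )


if __name__ == "__main__":
    nonce = secrets.token_bytes(16)  # fresh challenge for each attestation
    report = produce_report(nonce, b"trusted-firmware-v1", DEVICE_KEY)
    print(verify_report(report, nonce, DEVICE_KEY, EXPECTED_MEASUREMENT))
```

In a zero-trust setup along these lines, a client would send sensitive data to the compute asset only after a check like `verify_report` succeeds, and would issue a new nonce for every request so that old reports cannot be replayed.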

Confidential AI Computing Powers Secure AI

NVIDIA’s confidential computing GPUs can process AI workloads at performance levels comparable to GPUs running without confidential computing enabled.

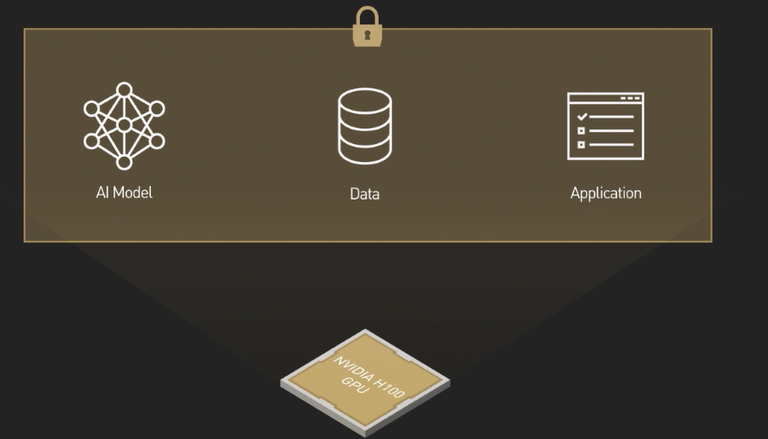

Confidential computing provides additional safeguards against unauthorized access to AI model systems and applications. This secure environment protects the data and algorithms used by AI applications from tampering.


Confidential Computing safeguards AI models & apps by protecting data and algorithms from unauthorized access and external tampering

Furthermore, confidential computing enables secure Multi-Party Computation, allowing different parties to collaborate on training AI models without exposing their own models or data to unauthorized access or attack.

For a more detailed explanation of the GPU infrastructure that powers confidential AI computing processes, please refer to this article.

https://www.nvidia.com/en-us/data-center/solutions/confidential-computing/

My next article will explore the confidential AI computing solutions provided by Secret Network and Continuum.

Thank you for reading!
