Establishing a reference for human vs bot team submission times in Splinterlands

In a previous post, I documented how we can investigate suspicious accounts using publicly available data on team submission times in Splinterlands. As part of that, and based on some feedback, I realized that I did not really know how bots behave.

- Do they submit their team quickly or slowly, or something in between?

- And how do human players behave?

So in this post, I will use team submission data from a large number of battles to establish these references. Once we know what a human typically plays like, and what a bot typically does, it will be easier to use team submission times as an indicator of bot activity in Splinterlands, in the modes where bots are not allowed by the TOS.

Also, the data is just interesting to look at, and I can use it to learn some new statistics methods. This time I learned about KMeans with scikit-learn.

The data was collected from the Splinterlands API with the same approach as outlined in the last post. All numbers reported here are durations from when an opponent is found for a match until the team is submitted.
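As a minimal sketch of what this duration means, assume each battle record carries two timestamps: one for when the opponent was found and one for when the team was submitted. The field names `match_found` and `submitted` and the sample records are hypothetical, not the actual Splinterlands API schema.

```python
from datetime import datetime

# Hypothetical battle records: field names and timestamp format are
# assumptions for illustration, not the real Splinterlands API schema.
battles = [
    {"match_found": "2022-05-01T12:00:00", "submitted": "2022-05-01T12:00:12"},
    {"match_found": "2022-05-01T12:05:00", "submitted": "2022-05-01T12:05:53"},
]


def submission_seconds(battle):
    """Seconds from finding an opponent until the team was submitted."""
    found = datetime.fromisoformat(battle["match_found"])
    submitted = datetime.fromisoformat(battle["submitted"])
    return (submitted - found).total_seconds()


durations = [submission_seconds(b) for b in battles]
print(durations)  # [12.0, 53.0]
```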

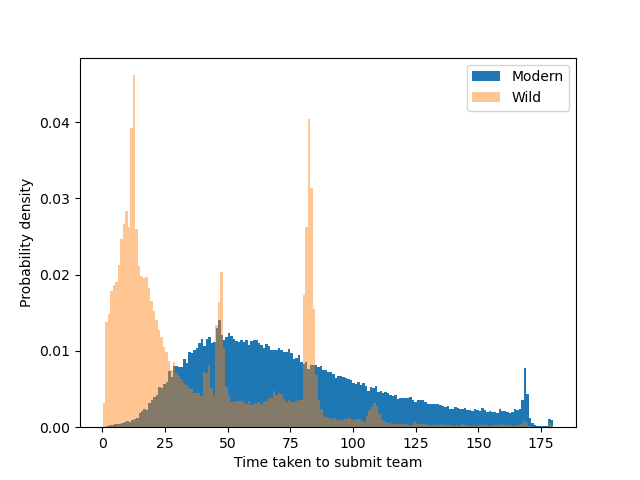

Submission time distribution, modern vs. wild

Based on 100 000 matches in wild and modern, here is the distribution of team submission times in the two formats:

There is a very clear distinction between the two formats. That is hardly surprising since, according to one SPL team member, somewhere around 95% of wild players are bots, while modern is predominantly human players.

In modern, most teams are submitted around the 50-second to 1-minute mark, but the tail is longer on the late-submission side.

For wild, the most common submission time is just about 12 seconds, and there are also some spikes in the distribution, probably representing different types of bots. It is extremely uncommon for bots to submit later than 2 minutes in.
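One simple way to find the most common submission time, and to spot spikes like these, is a histogram with narrow bins. The sketch below uses NumPy with 1-second bins; `times` is a small made-up array standing in for the real submission times, and the 1-second bin width is an assumption, not necessarily what was used for the plot above.

```python
import numpy as np

# Made-up submission times in seconds; replace with the real data.
times = np.array([11.6, 12.2, 12.4, 12.9, 30.1, 47.3, 55.0, 12.7, 106.2])

# 1-second bins from 0 up to just past the largest observed time
edges = np.arange(0, np.ceil(times.max()) + 2, 1.0)
counts, edges = np.histogram(times, bins=edges)

# The most common submission time is the fullest bin
mode_bin = np.argmax(counts)
print(f"Most common: {edges[mode_bin]:.0f}-{edges[mode_bin + 1]:.0f} s")

# Fraction of teams submitted later than 2 minutes
late_fraction = np.mean(times > 120)
print(f"Later than 2 minutes: {late_fraction:.1%}")
```

With real data, several separated peaks in `counts` would correspond to the spikes discussed above.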

We can have more fun with this data. Let's see what machine learning can tell us about the types of players we have...

KMeans clustering

I used the KMeans implementation provided by the scikit-learn package. It is very simple to use:

```python
# Import the KMeans class
from sklearn.cluster import KMeans

# Input observations: one row per player, one column per feature
# (here, the five submission-time quantiles described below)
X = match_times

# Initialize the KMeans object with 6 clusters
KM = KMeans(n_clusters=6)

# Identify clusters in the data set by calling the fit method of the KMeans class
KM.fit(X)

# The fitted KMeans object now holds the cluster index of each observation
# in the attribute labels_; print it to see the values
print(KM.labels_)
```

I processed the battle submission times for each player into a set of five quantiles evenly spaced between 0 and 1. The quantile values represent, for example, the upper bound of the player's 16.7% fastest submitted battles, the upper bound of the 33% fastest, and so on. With 5 quantiles we have the fractions 1/6, 2/6, 3/6, 4/6 and 5/6.
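These quantile features can be computed with `numpy.quantile`. This is a sketch assuming the submission times are grouped per player in a dict; `times_by_player` and the account names are hypothetical. The resulting `features` array has one row per player and five columns, which is the kind of input `X` that gets passed to `KMeans`.

```python
import numpy as np

# Hypothetical per-player submission times in seconds
times_by_player = {
    "player_a": [12, 12, 13, 12, 11, 12, 14],
    "player_b": [35, 52, 48, 61, 90, 44, 57],
}

# Five quantile levels evenly spaced between 0 and 1, excluding the ends:
# 1/6, 2/6, 3/6, 4/6, 5/6
levels = np.arange(1, 6) / 6

# One row of five quantiles per player (rows follow the sorted player names)
players = sorted(times_by_player)
features = np.array([np.quantile(times_by_player[p], levels) for p in players])

print(features.shape)  # (2, 5)
```

Using quantiles rather than raw times gives every player the same number of features, regardless of how many battles they played.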

The quantiles are a simplified representation of the player's submission time distribution. We can compare them among players to find out if there are good groupings of player types.

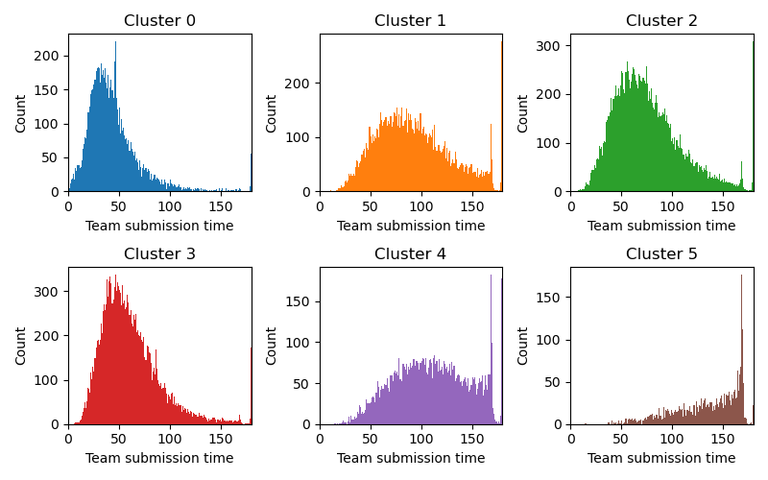

Modern player types:

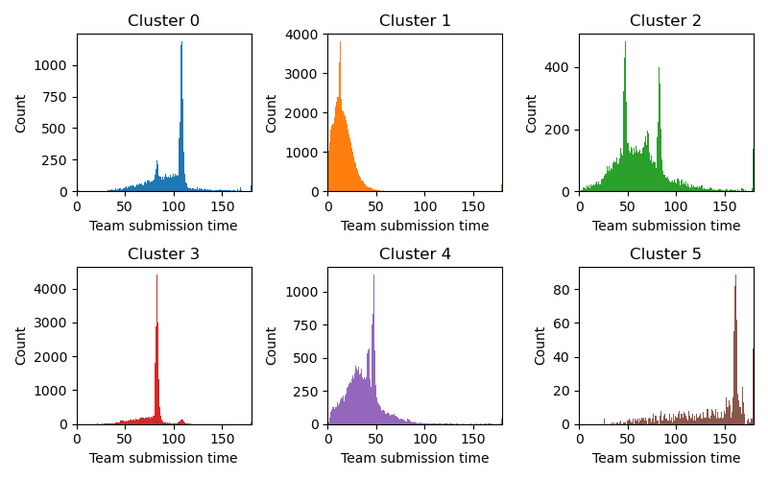

These are the six types of modern players identified by the KMeans approach:

And here is the fraction of players placed in each cluster:

| | Cluster 0 | Cluster 1 | Cluster 2 | Cluster 3 | Cluster 4 | Cluster 5 |
|---|---|---|---|---|---|---|
| Fraction of players | 0.12 | 0.19 | 0.27 | 0.27 | 0.12 | 0.04 |

Cluster 0 represents the quickest players. The most common submission time in this group is 30 seconds, and they rarely take much more than a minute to submit. Clusters 2 and 3 are somewhat similar, with typical submission times around 50 and 70 seconds, respectively. These are also the most common groups to be in, and represent your average player. Clusters 1 and 4 are the players who drag things out a bit more and fairly often run into the submission deadline. Finally, cluster 5 is a small group of players who really like to use all the available time to submit.
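The fractions in the cluster table are simply the share of players assigned to each cluster. Given the `labels_` array from a fitted `KMeans` object, one way to compute them is with `numpy.bincount`; the labels below are made up for illustration.

```python
import numpy as np

# Made-up cluster labels, standing in for KM.labels_ from a fitted model
labels = np.array([2, 3, 2, 1, 0, 3, 2, 4, 3, 5, 1, 2])

n_clusters = 6
# minlength ensures empty clusters still get a zero count
counts = np.bincount(labels, minlength=n_clusters)
fractions = counts / counts.sum()

for cluster, frac in enumerate(fractions):
    print(f"Cluster {cluster}: {frac:.2f}")
```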

Wild player types:

Here are the types of players in wild that this method identifies:

And here is the fraction of players placed in each cluster:

| | Cluster 0 | Cluster 1 | Cluster 2 | Cluster 3 | Cluster 4 | Cluster 5 |
|---|---|---|---|---|---|---|
| Fraction of players | 0.09 | 0.46 | 0.09 | 0.18 | 0.16 | 0.01 |

Obviously, these groups are completely different from the modern results. Each group has a spike at a very specific submission time: in cluster 0 it is at 106 seconds, cluster 1 has a spike at 12 seconds, and so on.
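To locate the spike of each cluster, one option is to pool all submission times of the players in a cluster and find the fullest 1-second bin. This is a sketch with hypothetical inputs: `times_by_player` and `cluster_of` (player to cluster label, for example taken from `KM.labels_`) are made up here.

```python
from collections import defaultdict

import numpy as np

# Hypothetical per-player submission times and cluster labels
times_by_player = {
    "bot_1": [106.1, 106.4, 105.9, 106.7],
    "bot_2": [12.3, 12.1, 12.8, 12.5],
    "bot_3": [106.2, 106.9, 106.3],
}
cluster_of = {"bot_1": 0, "bot_2": 1, "bot_3": 0}

# Pool all submission times belonging to each cluster
pooled = defaultdict(list)
for player, times in times_by_player.items():
    pooled[cluster_of[player]].extend(times)

# The spike is the fullest 1-second bin in each cluster
peaks = {}
for cluster, times in sorted(pooled.items()):
    arr = np.asarray(times)
    counts = np.bincount(np.floor(arr).astype(int))
    peaks[cluster] = int(np.argmax(counts))
    print(f"Cluster {cluster}: spike at about {peaks[cluster]} s")
```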

Final words

I found it quite fun to work with this data. Splinterlands offers a good data set to play with and test data analysis methods.

I hope you found this post interesting. If you have not yet joined Splinterlands please click the referral link below to get started.

Best wishes

@Kalkulus

Excellent, @Kalkulus! I think it's a great post.

In the wild format it is evident that these peaks must correspond to certain bot algorithms. Not all accounts that submit teams at those times are necessarily bots, but it is an anomaly and therefore a sign of possible bot activity.

In the modern format there are no obvious peaks, except in clusters 4 and 5, where they clearly show up as time is running out. To me, this behavior is quite suggestive, and I assume that it is most likely due to bot algorithms.

This is my opinion, and therefore far from being a fact, but I believe the bots that cheat are located in this zone where time is running out. If you read my post "At last! The best Splinterlands update in a long time", about the update that closed a backdoor published several months ago, you will understand what I mean. What I still don't understand is why Splinterlands now automatically submits teams before the time runs out; it's as if they want to give those algorithms another chance.

In any case, if these spikes do come from bots in modern, which violates the TOS, they will very likely start to spread out along the timeline. That is, the bot operators will probably start randomizing the times at which they submit their teams. But that would be a clear demonstration that the spikes do correspond to bots, and a validation of your analysis methodology.

If you continue with your detective work, you have the advantage that everything has been recorded in stone, or rather on the blockchain. Therefore, you can analyze how many accounts have been sending teams at exactly the same delta time. It would be interesting to see whether they are always the same accounts, which would make them very good candidates for bot users. Although this is not necessarily proof, it is an important piece of evidence.
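A sketch of this suggestion: round each submission time to a fixed resolution and collect which accounts submitted at each value. The 0.5-second resolution, the `min_accounts` threshold, and the sample records are assumptions for illustration only.

```python
from collections import defaultdict

# Hypothetical (account, submission time in seconds) records
records = [
    ("acct_a", 46.6), ("acct_b", 46.4), ("acct_c", 46.7),
    ("acct_a", 46.5), ("acct_d", 58.2), ("acct_e", 73.9),
]

RESOLUTION = 0.5  # seconds; assumed bucket width for "the same delta time"

# Map each rounded delta time to the set of accounts that used it
accounts_at = defaultdict(set)
for account, seconds in records:
    bucket = round(seconds / RESOLUTION) * RESOLUTION
    accounts_at[bucket].add(account)

# Report delta times shared by several distinct accounts
min_accounts = 3
shared = {t: sorted(a) for t, a in accounts_at.items() if len(a) >= min_accounts}
print(shared)
```

Repeating this over several time periods would show whether the same accounts keep landing in the same buckets.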

Again, congratulations on an excellent post, and thank you for keeping Splinterlands part of my interests and entertainment.

Greetings...

Thanks again for your feedback. I went back and read the post you linked, and you make some interesting points there. Based on the last five battles, it is possible to identify who your opponent is; there is no doubt about that. If there is some way to use that to see what team they submit... well, that's an exploit that should be closed.

Just out of curiosity, I looked at the small spike in the modern data (the blue spike directly behind the orange spike), where teams are submitted at almost exactly 46-47 seconds.

Indeed, there were a few accounts that submitted almost exclusively at this duration. That is clearly bot behavior. However, those accounts were in Bronze, and we do have liquidity bots in modern at the moment, so my guess is that this is what I'm seeing here.
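To check whether an account submits "almost exclusively" within a narrow window, one can compute the share of its battles that fall inside it. The 46-47 second window comes from the text above; the 80% cutoff and the sample data are assumptions.

```python
# Hypothetical per-account submission times in seconds
times_by_account = {
    "suspect": [46.2, 46.8, 46.5, 46.9, 46.1, 71.0],
    "human": [38.0, 46.4, 55.2, 62.9, 90.1, 120.5],
}

WINDOW = (46.0, 47.0)  # seconds, the spike examined in the text
CUTOFF = 0.8           # assumed share that counts as "almost exclusively"


def share_in_window(times, low, high):
    """Fraction of submission times within [low, high]."""
    inside = sum(low <= t <= high for t in times)
    return inside / len(times)


flagged = []
for account, times in times_by_account.items():
    share = share_in_window(times, *WINDOW)
    print(f"{account}: {share:.0%} of battles in window")
    if share >= CUTOFF:
        flagged.append(account)
```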

Unfortunately, as I understand it, Splinterlands battle data is not written to Hive, but goes directly to and from the Splinterlands servers. Because of that, I can only go back 50 battles in each format for each account.

If the anomalous spike appears in both modern and wild, then there is no doubt that they are bots. That just means your analysis methodology is on track.

If, as you say, they are Splinterlands bots, they are not a problem. But at the end of the day they are still bots, and this shows that both good and bad bots can be identified with this methodology.

What may be very important is that the battle data you use is up to date, because the last 50 battles could include data from months ago, when bots were still allowed in both formats.

Even if you can't pull older battle data from the Splinterlands servers, you might want to write your own script to collect that data firsthand.

It occurs to me that you could create your own crawler and in this way obtain firsthand, continuously updated data. For example, you could use your own account as a trigger by starting a battle. Next, you read your battle history to find the name of the user you just battled. You use this user as the starting point for your crawler, reading that user's battle history. Here you define your analysis time window, let's say 24 hours. From that user's history you then get the battles of the last 24 hours, as well as the users they battled. You repeat the same procedure with each of those users and "voilà", in theory you get all the players who have battled in that format during the last 24 hours.
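The crawler described above is essentially a breadth-first search over players, limited to a time window. Below is a minimal sketch in which `fetch_battles` is a hypothetical stand-in for whatever call returns a player's recent battles; here it reads from an in-memory dict so the example runs offline, and the (opponent, battle time) record shape is an assumption.

```python
from collections import deque
from datetime import datetime, timedelta

# Hypothetical, in-memory battle histories: player -> [(opponent, battle time)].
# A real crawler would replace fetch_battles with calls to a battle history API.
NOW = datetime(2022, 5, 2, 12, 0)
HISTORY = {
    "me": [("alice", NOW - timedelta(hours=1))],
    "alice": [
        ("me", NOW - timedelta(hours=1)),
        ("bob", NOW - timedelta(hours=5)),
        ("carol", NOW - timedelta(hours=30)),
    ],
    "bob": [("alice", NOW - timedelta(hours=5)), ("dave", NOW - timedelta(hours=20))],
    "carol": [("alice", NOW - timedelta(hours=30))],
    "dave": [("bob", NOW - timedelta(hours=20))],
}


def fetch_battles(player):
    """Hypothetical stand-in for fetching a player's recent battles."""
    return HISTORY.get(player, [])


def crawl(start_player, window, now):
    """Breadth-first crawl of players who battled within the time window."""
    cutoff = now - window
    seen = {start_player}
    queue = deque([start_player])
    battles = set()  # each battle shows up in both players' histories
    while queue:
        player = queue.popleft()
        for opponent, when in fetch_battles(player):
            if when < cutoff:
                continue  # older than the analysis window
            pair = tuple(sorted((player, opponent)))
            battles.add((pair, when))
            if opponent not in seen:
                seen.add(opponent)
                queue.append(opponent)
    return seen, battles


# Start from your own account, as in the trigger idea above
players, battles = crawl("me", timedelta(hours=24), NOW)
print(sorted(players))  # ['alice', 'bob', 'dave', 'me']
print(len(battles))     # 3
```

Players reachable only through battles older than the window (like `carol` here) are not visited, so in practice coverage depends on how well connected the players are within that window.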

The advantage of using a crawler is that you get completely up-to-date data, which you can save in a database for future analysis. The disadvantage is that you need to keep the script running permanently, or schedule it with a crontab entry that runs it at specific times.

Finally, thinking about the spikes at the end of the timeline, I realize that this data may be obscured, because Splinterlands automatically submits teams when the time runs out. But even so, I am convinced that this area is where bots are most likely to be found...

Thanks for sharing! - @alokkumar121